Traditionally, Kubernetes has used an Ingress controller to handle the traffic that enters the cluster from the outside. When using Istio, this is no longer the case. Istio has replaced the familiar Ingress resource with new Gateway and VirtualService resources, which work in tandem to route the traffic into the mesh. Inside the mesh there is no need for Gateways, since the services can reach each other by their cluster-local service names.
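For comparison, here is a minimal sketch of the traditional approach: a plain Kubernetes Ingress that sends traffic for counter.lab.example.com to a counter Service on port 80 (the same host and Service as the VirtualService example later in this article). It assumes the networking.k8s.io/v1 Ingress API and an Ingress controller already running in the cluster. With Istio, the same job is split between a Gateway and a VirtualService.

```yaml
# Sketch only: a plain Kubernetes Ingress (networking.k8s.io/v1), shown for comparison.
# Assumes an Ingress controller is installed and a Service named "counter" exposes port 80.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: counter
spec:
  rules:
  - host: counter.lab.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: counter
            port:
              number: 80
```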

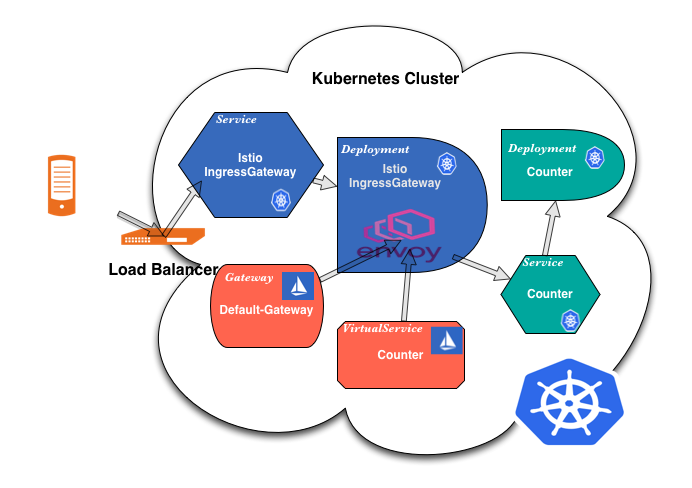

So how does it work? How does a request reach the application it is meant for? It is more complicated than one would think. The drawing above shows the overall flow, and here is a quick overview.

- A client makes a request on a specific port.
- The Load Balancer listens on this port and forwards the request to one of the workers in the cluster (on the same or a new port).
- Inside the cluster, the request is routed to the Istio IngressGateway Service, which is listening on the port the load balancer forwards to.
- The Service forwards the request (on the same or a new port) to an Istio IngressGateway Pod (managed by a Deployment).
- The IngressGateway Pod is configured by a Gateway (!) and a VirtualService.
  - The Gateway configures the ports, protocol, and certificates.
  - The VirtualService configures routing information to find the correct Service.
- The Istio IngressGateway Pod routes the request to the application Service.
- And finally, the application Service routes the request to an application Pod (managed by a Deployment).

The Load Balancer

The load balancer can be configured manually or automatically through the service type: LoadBalancer. Since not all clouds support automatic configuration, I'm assuming that the load balancer is configured manually to forward traffic to ports that the IngressGateway Service is listening on. A manually configured load balancer doesn't communicate with the cluster to find out where the backing pods are running, so we must expose the Service with type: NodePort. Node ports are only available in a high port range, 30000-32767 by default.

Our load balancer listens on the following ports:

- HTTP - Port 80, forwards traffic to port 30080.

- HTTPS - Port 443, forwards traffic to port 30443.

- MySQL - Port 3306, forwards traffic to port 30306.

Make sure your load balancer configuration forwards to all your worker nodes. This will ensure that the traffic gets forwarded even if some nodes are down.
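If your cloud does support automatic configuration, you can let Kubernetes provision the load balancer instead of wiring it up by hand. The sketch below shows the relevant fields, assuming the default istio-ingressgateway Service in istio-system and the istio: ingressgateway pod label that the Gateway selector later in this article relies on. With type: LoadBalancer, Kubernetes still allocates node ports behind the scenes, but you no longer have to point the load balancer at them yourself.

```yaml
# Sketch: let the cloud provider create and configure the load balancer.
# Names and labels assume a default Istio install; adjust them to your cluster.
apiVersion: v1
kind: Service
metadata:
  name: istio-ingressgateway
  namespace: istio-system
spec:
  type: LoadBalancer
  selector:
    istio: ingressgateway
  # targetPort is omitted, so it defaults to the same value as port;
  # check which ports your gateway pods actually listen on.
  ports:
  - name: http2
    port: 80
    protocol: TCP
  - name: https
    port: 443
    protocol: TCP
  - name: mysql
    port: 3306
    protocol: TCP
```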

The IngressGateway Service

The IngressGateway Service must listen on all the above node ports to be able to forward the traffic to the IngressGateway pods. We use the Service's port mapping to bring the port numbers back to their defaults, for example from node port 30080 back to port 80.

Please note that a Kubernetes Service is not a "real" service in the sense of a running process that handles traffic. Since we are using type: NodePort, kube-proxy, which runs on every node, sets up forwarding rules (iptables rules in its default mode) so that a request arriving on a node port is forwarded to one of the IngressGateway pods, even if that pod runs on a different node.

```yaml
# From the istio-ingressgateway service
ports:
- name: http2
  nodePort: 30080
  port: 80
  protocol: TCP
- name: https
  nodePort: 30443
  port: 443
  protocol: TCP
- name: mysql
  nodePort: 30306
  port: 3306
  protocol: TCP
```

If you inspect the service, you will see that it defines more ports than I have described above. These ports are used for internal Istio communication.

The IngressGateway Deployment

Now we have reached the most interesting part of this flow, the IngressGateway. This is a fancy wrapper around the Envoy proxy, and it is configured in the same way as the sidecars used inside the service mesh (it is actually the same container image). When we create or change a Gateway or VirtualService, the changes are detected by the Istio Pilot controller, which converts this information to an Envoy configuration and sends it to the relevant proxies, including the Envoy inside the IngressGateway.

Don't confuse the IngressGateway with the Gateway resource. The Gateway resource is used to configure the IngressGateway.

Since container ports don't have to be declared in Kubernetes pods or deployments, we don't have to declare the ports in the IngressGateway Deployment. But if you look inside the Deployment, you can see that a number of ports are declared anyway (unnecessarily).

What we do have to care about in the IngressGateway Deployment is the SSL certificates. For the Gateway resources to be able to reference the certificates, make sure that they are properly mounted into the IngressGateway pods.

```yaml
# Example certificate volume mounts
volumeMounts:
- mountPath: /etc/istio/ingressgateway-certs
  name: ingressgateway-certs
  readOnly: true
- mountPath: /etc/istio/ingressgateway-ca-certs
  name: ingressgateway-ca-certs
  readOnly: true

# Example certificate volumes
volumes:
- name: ingressgateway-certs
  secret:
    defaultMode: 420   # decimal for file mode 0644
    optional: true
    secretName: istio-ingressgateway-certs
- name: ingressgateway-ca-certs
  secret:
    defaultMode: 420   # decimal for file mode 0644
    optional: true
    secretName: istio-ingressgateway-ca-certs
```
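The volumes above expect a Secret named istio-ingressgateway-certs in the istio-system namespace. Here is a minimal sketch of such a Secret, assuming the standard kubernetes.io/tls type: its tls.crt and tls.key keys become the file names under /etc/istio/ingressgateway-certs that the Gateway below refers to. The data values are placeholders.

```yaml
# Sketch: the TLS Secret mounted at /etc/istio/ingressgateway-certs.
# The data values are placeholders for your base64-encoded certificate and key.
apiVersion: v1
kind: Secret
metadata:
  name: istio-ingressgateway-certs
  namespace: istio-system
type: kubernetes.io/tls
data:
  tls.crt: <base64-encoded certificate>
  tls.key: <base64-encoded private key>
```

Equivalently, kubectl can build the Secret from PEM files (file names assumed here): kubectl create -n istio-system secret tls istio-ingressgateway-certs --key tls.key --cert tls.crt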

The Gateway

The Gateway resources are used to configure the ports for Envoy. Since we have exposed three ports with the service, we need these ports to be handled by Envoy. We can do this by declaring one or more Gateways. In my example, I'm going to use a single Gateway, but it may be split into two or three.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: default-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway
  servers:
  - hosts:
    - '*'
    port:
      name: http
      number: 80
      protocol: HTTP
  - hosts:
    - '*'
    port:
      name: https
      number: 443
      protocol: HTTPS
    tls:
      mode: SIMPLE
      privateKey: /etc/istio/ingressgateway-certs/tls.key
      serverCertificate: /etc/istio/ingressgateway-certs/tls.crt
  - hosts: # For TCP routing this field seems to be ignored, but it is still matched
    - '*'  # against the VirtualService hosts. I use '*' since it matches anything.
    port:
      name: mysql
      number: 3306
      protocol: TCP
```

Valid protocols are HTTP|HTTPS|GRPC|HTTP2|MONGO|TCP|TLS. More info about Gateways can be found in the Istio Gateway docs.
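The single Gateway above could also be split, for example by moving the MySQL server into a Gateway of its own. Here is a sketch, assuming the hypothetical name mysql-gateway. The HTTP and HTTPS servers would stay in default-gateway, and the TCP VirtualService would then have to list mysql-gateway.istio-system.svc.cluster.local in its gateways field instead.

```yaml
# Sketch: a separate Gateway for the MySQL port only.
# "mysql-gateway" is a hypothetical name chosen for this example.
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: mysql-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway  # same IngressGateway pods as default-gateway
  servers:
  - hosts:
    - '*'
    port:
      name: mysql
      number: 3306
      protocol: TCP
```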

The VirtualService

Our final interesting resource is the VirtualService, which works in concert with the Gateway to configure Envoy. If you only add a Gateway, nothing will show up in the Envoy configuration, and the same is true if you only add a VirtualService.

VirtualServices are really powerful, and they enable the intelligent routing that is one of the very reasons we want to use Istio in the first place. However, I'm not going into it in this article, since it is about the basic networking and not the fancy stuff.

Here's a basic configuration for an HTTP(s) service.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: counter
spec:
  gateways:
  - default-gateway.istio-system.svc.cluster.local
  hosts:
  - counter.lab.example.com
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        host: counter
        port:
          number: 80
```

Now that we have added both a Gateway and a VirtualService, the routes have been created in the Envoy configuration. To see this, run kubectl -n istio-system port-forward istio-ingressgateway-xxxx-yyyy 15000 and check out the configuration by browsing to http://localhost:15000/config_dump.

Note that both the gateway reference and the hosts in the VirtualService must match the information in the Gateway. If they don't, the entry will not show up in the configuration.

```
// Example of http route in Envoy config
{
  name: "counter:80",
  domains: [
    "counter.lab.example.com"
  ],
  routes: [
    {
      match: {
        prefix: "/"
      },
      route: {
        cluster: "outbound|80||counter.default.svc.cluster.local",
        timeout: "0s",
        max_grpc_timeout: "0s"
      },
      ...
```
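To illustrate the matching rule, here is a sketch of a Gateway that is narrower than the catch-all '*' used earlier: it only accepts hosts under lab.example.com on port 80, paired with a variant of the counter VirtualService that binds to it. The route still appears because counter.lab.example.com is covered by *.lab.example.com; a VirtualService for a host outside that domain, or one naming a different gateway, would not produce a route. The name lab-gateway is hypothetical, and this would replace default-gateway rather than run alongside it.

```yaml
# Sketch: a Gateway restricted to one domain (hypothetical name "lab-gateway").
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: lab-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway
  servers:
  - hosts:
    - '*.lab.example.com'  # wildcard prefix: covers counter.lab.example.com
    port:
      name: http
      number: 80
      protocol: HTTP
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: counter
spec:
  gateways:
  - lab-gateway.istio-system.svc.cluster.local  # must name the Gateway above
  hosts:
  - counter.lab.example.com  # must be covered by the Gateway's hosts
  http:
  - route:  # no match block: all paths go to the counter Service
    - destination:
        host: counter
        port:
          number: 80
```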

Here's a basic configuration for a TCP service.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: mysql
spec:
  gateways:
  - default-gateway.istio-system.svc.cluster.local
  hosts: # The hosts field seems to be used only to match the Gateway.
  - '*'  # I'm using '*'; the listener created listens on 0.0.0.0.
  tcp:
  - match:
    - port: 3306
    route:
    - destination:
        host: mysql.default.svc.cluster.local
        port:
          number: 3306
```

This results in a completely different entry in the Envoy configuration: a listener on port 3306 rather than an HTTP route.

```
listener: {
  name: "0.0.0.0_3306",
  address: {
    socket_address: {
      address: "0.0.0.0",
      port_value: 3306
    }
  },
  ...
```

Application Service and Deployment

Our request has now reached the application Service and Deployment. These are just normal Kubernetes resources, and I will assume that if you have read this far, you already know all about them. :)

Debugging

Debugging networking issues can be difficult at times, so here are some aliases that I find useful.

```bash
# Port forward to the first istio-ingressgateway pod
alias igpf='kubectl -n istio-system port-forward $(kubectl -n istio-system get pods -l istio=ingressgateway -o=jsonpath="{.items[0].metadata.name}") 15000'

# Get the http routes from the port-forwarded ingressgateway pod (requires jq)
# The jq path matches the config_dump layout of the Envoy version used here;
# newer Envoy versions may return .configs as an array, which needs a different path.
alias iroutes='curl --silent http://localhost:15000/config_dump | jq '\''.configs.routes.dynamic_route_configs[].route_config.virtual_hosts[] | {name: .name, domains: .domains, route: .routes[].match.prefix}'\'''

# Get the logs of the first istio-ingressgateway pod
# Shows what happens with incoming requests and possible errors
alias igl='kubectl -n istio-system logs $(kubectl -n istio-system get pods -l istio=ingressgateway -o=jsonpath="{.items[0].metadata.name}") --tail=300'

# Get the logs of the first istio-pilot pod
# Shows issues with configurations or connecting to the Envoy proxies
alias ipl='kubectl -n istio-system logs $(kubectl -n istio-system get pods -l istio=pilot -o=jsonpath="{.items[0].metadata.name}") discovery --tail=300'
```

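Once you have started port forwarding to the istio-ingressgateway with igpf, here are some more things you can do:

- To see the Envoy listeners, browse to http://localhost:15000/listeners.
- To see the current log levels, browse to http://localhost:15000/logging. To turn on more verbose logging, send a request to the same endpoint, for example curl -X POST "http://localhost:15000/logging?level=debug" (newer Envoy versions require POST for this).
- More information can be found at the root, http://localhost:15000/.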

Conclusion

Networking with Kubernetes and Istio is far from trivial; hopefully this article has shed some light on how it works. Here are some key takeaways.

To Add a New Port to the IngressGateway

- Add the port to an existing Gateway or configure a new one.
- If it's a TCP service, also add the port to the VirtualService. This is not needed for HTTP, since it matches on layer 7 (domain name, etc.).
- Add the port to the ingressgateway Service. If you are using service type: LoadBalancer, you are done.
- Otherwise, open the port in the load balancer and forward traffic to all worker nodes.
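As a worked example of these steps, here is a sketch of the changes for a hypothetical PostgreSQL service on port 5432, reached through node port 30432. The names postgres and postgres-gateway and the port numbers are assumptions; the fragments follow the same pattern as the MySQL setup above.

```yaml
# Step 1: a Gateway server for the new port (hypothetical "postgres-gateway").
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: postgres-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway
  servers:
  - hosts:
    - '*'
    port:
      name: postgres
      number: 5432
      protocol: TCP
---
# Step 2: TCP routing matched on the port (hypothetical "postgres" Service).
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: postgres
spec:
  gateways:
  - postgres-gateway.istio-system.svc.cluster.local
  hosts:
  - '*'
  tcp:
  - match:
    - port: 5432
    route:
    - destination:
        host: postgres.default.svc.cluster.local
        port:
          number: 5432
# Step 3: add this entry to the ports list of the istio-ingressgateway Service,
# then forward port 5432 on the load balancer to node port 30432 on all workers:
# - name: postgres
#   nodePort: 30432
#   port: 5432
#   protocol: TCP
```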

To Add Certificates to an SSL Service

- Add the TLS secrets to the cluster.
- Mount the secret volumes in the ingressgateway.
- Configure the Gateway to use the newly created secrets.
